Since 2022, Dr. Gloria Washington has been spearheading an approach to the artificial intelligence boom that places people at the center. As director of Howard’s Human Centered Artificial Intelligence Institute (HCAI), funded by the Office of Naval Research (ONR), she and her team of researchers are working to ensure AI is useful for the actual people it serves, including those at other HBCUs, in industry, and in government.

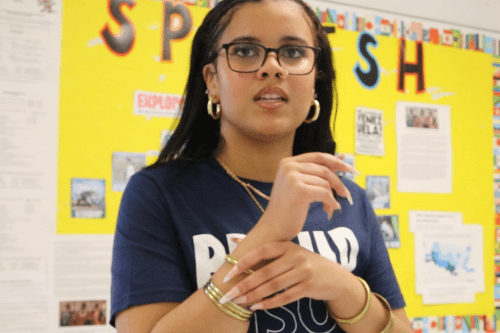

You may already be familiar with Washington’s work in AI; this summer, Howard announced the completion of the Google-sponsored Project Elevate Black Voices. Led by Washington, the project provides a database of more than 600 hours of recorded African American dialects from across the United States. The dataset is designed to reduce the errors automated speech recognition systems make when recognizing different dialects, and Washington says future work on the project will help build a consortium of HBCUs to investigate how best to preserve, safeguard, and use this data in future technologies, including AI.

“That is exciting because the HBCUs that we have already worked with in the past have been excited about using the data set and also about developing these fair usage guidelines around how AI is impacting the larger community that speaks dialects of African American English,” said Washington. “It’s really interesting. This is a project that is ongoing and we believe will take us into a new realm.”

As the spring 2026 semester approaches, Washington and the HCAI staff continue to push the boundaries of how AI can best serve people, even when those people are under extreme pressure.

Assisting Decision-Making Under High Stress

HCAI’s current research focuses on improving tactical decision-making in high-stress situations. Specifically, Washington’s team is working to build chatbots on large language models (LLMs), AI models trained on vast amounts of text, along with “extended reality” tools to help naval officers make better-informed decisions in the field. The effort is enormous and complex, requiring models developed on tightly limited military datasets, simulations of high- and low-stress environments, and in-depth research into how stress affects cognitive load and situational awareness.

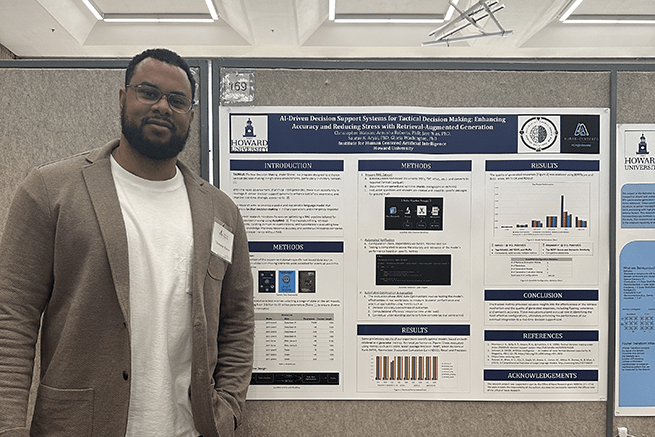

“What this tool is intended to do is to help [make] decision-making less burdensome,” explained third-year doctoral student, software engineer, and former educator Christopher Watson. “So, it’s going to be a large language model paired with an augmented reality component that can interface with the model.” Simply put, the tool will turn the model’s text output into interactive augmented reality displays that use colors, icons, and other graphics to show the importance of decisions.

Watson works on the LLM side of the project, fine-tuning the Tactical Decision-Making Under Stress (TADMUS) model, which will incorporate a technique known as retrieval-augmented generation. In this approach, before responding to the user, the system searches a database of external materials (in this case, military protocol documents) for potentially relevant information and supplies it to the model. Grounding answers in those documents can reduce hallucinations, the confident but incorrect answers LLMs sometimes produce, and can ease the cognitive load on users, who may struggle to recall exact protocols in high-stress situations.
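For readers curious how retrieval-augmented generation works mechanically, here is a minimal sketch in Python. It is not the TADMUS pipeline: the three "protocol" snippets are invented placeholders, and simple word-count cosine similarity stands in for the learned embeddings and vector search a production system would more likely use. The retrieved passages are placed ahead of the user's question in the prompt; the call to the language model itself is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical stand-ins for protocol documents; the real corpus is not public.
DOCUMENTS = [
    "Unknown surface contact closing: verify identity via radio challenge before escalation.",
    "Man overboard: sound alarm, mark position, and maneuver to recover the person.",
    "Fire in engine room: secure ventilation, isolate fuel supply, and muster damage control.",
]


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend retrieved passages so the model answers from them, not from memory alone."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the protocol excerpts below.\n"
        f"Protocol excerpts:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("What should I do about a fire in the engine room?", DOCUMENTS))
```

The design point is that the model sees the relevant protocol text at answer time, so an officer does not have to recall it under pressure.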

To be usable in the field, however, the model needs real-world context to respond to, which has proven to be a challenge. Unsurprisingly, detailed images of active naval vessels are scarce and largely confidential, meaning the dataset to simulate real-world naval missions is limited.

This is where the work of Senior Research Scientist Saurav Aryal (B.S. ’18, Ph.D. ’21) comes in. After scouring YouTube, Aryal found around 100 images of 30 different vessels, not nearly enough to reliably train the model. By augmenting the images through flipping and zooming in, his lab was able to push that number higher. To make the vessels appear farther away, which is more useful for naval missions and even harder to source, the lab turned to AI.

“The idea was, could we use generative AI to fill in the background of an image and thereby kind of make it appear as if it’s far away,” Aryal explained. “We can zoom in to make it appear closer, but we couldn’t previously make it look far away. And from a set of 100 images, we ballooned it to around 1,000, pushing it farther and farther out, and it’s been promising.”
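The flip and zoom steps Aryal describes are standard image data augmentation. Below is a minimal, dependency-free sketch on a grayscale image stored as nested lists. It is an illustration under assumptions, not the lab's code: the function names and zoom factors are hypothetical, and the "zoom out" function simply shrinks the image and pads the border with a constant value, whereas the lab reportedly used generative AI to paint in a realistic background, a step not reproduced here.

```python
Image = list[list[int]]  # grayscale pixels; all rows have equal length


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def resize_nearest(img: Image, new_h: int, new_w: int) -> Image:
    """Nearest-neighbor resize to new_h x new_w."""
    h, w = len(img), len(img[0])
    return [[img[r * h // new_h][c * w // new_w] for c in range(new_w)] for r in range(new_h)]


def zoom_in(img: Image, factor: int) -> Image:
    """Crop the center 1/factor of the image and scale it back up (subject looks closer)."""
    h, w = len(img), len(img[0])
    ch, cw = max(1, h // factor), max(1, w // factor)
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = [row[left:left + cw] for row in img[top:top + ch]]
    return resize_nearest(crop, h, w)


def zoom_out(img: Image, factor: int, fill: int = 0) -> Image:
    """Shrink the image and pad it back to full size (subject looks farther away).

    The constant `fill` border is a placeholder for the AI-generated background
    described in the article; no generative in-painting happens here.
    """
    h, w = len(img), len(img[0])
    sh, sw = max(1, h // factor), max(1, w // factor)
    small = resize_nearest(img, sh, sw)
    top, left = (h - sh) // 2, (w - sw) // 2
    out = [[fill] * w for _ in range(h)]
    for r in range(sh):
        out[top + r][left:left + sw] = small[r]
    return out


def augment(img: Image) -> list[Image]:
    """Produce several variants of one source image."""
    variants = [img, flip_horizontal(img)]
    for f in (2, 4):
        variants.append(zoom_in(img, f))
        variants.append(zoom_out(img, f))
    return variants
```

With real photos, a library such as Pillow or torchvision would normally handle these transforms; plain lists keep the sketch self-contained and make the geometry easy to check.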

A Tool Designed for People

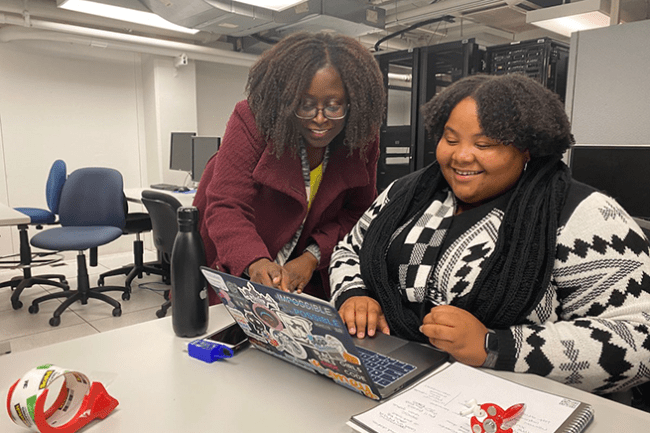

The accuracy of the TADMUS model means little if the tool isn’t useful to real people. Making sure it actually reduces stress and cognitive load requires merging computer science, design, and psychology.

Senior Research Scientist Dr. Lucretia Williams’s work focuses on human-computer interaction, particularly in health and education. For the ONR project, her lab is testing how stress specifically affects decision-making.

“Specifically, I created two simulated environments, one calm and one stressful,” she said. “The calm environment includes light ambient noise, similar to what you’d hear in a coffee shop, and ample time to read and make a decision. But the stressful environment includes loud background noise, people may be yelling commands, and a super tight time constraint.”

As a pilot test, Williams has been running the simulations with students, asking them to respond to prompts using TADMUS in either the calm or the stressful environment. Students also complete the NASA Task Load Index (NASA-TLX), a questionnaire designed to measure a task’s cognitive load, as well as a perceived stress scale questionnaire. The results help the researchers refine the model by pinning down what type of information it should provide to effectively reduce stress in different environments.
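Scoring the NASA-TLX is simple arithmetic, and a short sketch shows what a single "cognitive load" number means. The article does not say which scoring variant the HCAI team uses, so the code below shows the two standard ones: the raw TLX averages six subscale ratings (each 0 to 100), and the weighted TLX multiplies each rating by a weight from 15 pairwise comparisons (weights total 15) and divides by 15. The dictionary keys are hypothetical labels, and the ratings in the example are made up, not study data.

```python
# Six standard NASA-TLX dimensions. Ratings are on a 0-100 scale where higher
# means more workload; for "performance", higher means worse perceived performance.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Raw TLX: the unweighted mean of the six subscale ratings."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted TLX: each weight counts how often a subscale was chosen in the
    15 pairwise comparisons, so the weights must total 15."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("weights must sum to 15 (one per pairwise comparison)")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


if __name__ == "__main__":
    # Hypothetical ratings from one participant in each environment.
    calm = {"mental": 40, "physical": 10, "temporal": 30,
            "performance": 20, "effort": 35, "frustration": 15}
    stressful = {"mental": 80, "physical": 20, "temporal": 90,
                 "performance": 50, "effort": 75, "frustration": 70}
    print(raw_tlx(calm), round(raw_tlx(stressful), 1))
```

Comparing such scores between the calm and stressful conditions is one way a study like this could check whether the stress manipulation actually raised perceived workload.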

Just as important as what information the model provides is how it provides it. Especially in high-stakes scenarios, the model must work as a tool, not as another distraction.

“We focus a lot within computer science on just the technical aspect, but what does that look like in an actual functional state? While we are working on training this large language model and being able to use AI to provide better information, that's only half of the problem,” said Senior Research Scientist Simone Smarr, Ph.D., whose work focuses on applying the text-based model to augmented reality tools. “Because then the question is, ‘How are we going to display that information?’ And we’re trying to explore this different and more interactive way of displaying.”

Smarr’s lab is still searching for the best augmented reality device for the job but is narrowing in on wearable glasses, similar to Ray-Ban Meta glasses, that can quickly deliver information to crews on naval vessels. To make the final form intuitive for users, she is drawing on her experience in UX design, and her team is constantly testing methods for alerting users.

“We have an alerting system that's built within the system,” Smarr explained. “So, the questions are, one, is that clear, but also is it helpful? At what point is it that this is just too much stuff going on? Within a cognitive load space, especially within augmented reality, I think it's the same kind of UX design problem, which I'm really interested in because I’ve done a lot of general UX. It’s the same thing with your computer, when there are too many things on a screen.”

Dr. Jaye Nias, who is leading the assessment of TADMUS’s accuracy, best captured HCAI’s interdisciplinary, boundary-pushing spirit, reflecting on the tool’s potential civilian uses in the high-stress moments we experience every day, like driving.

“I think all the things we do always have an application to some other part of our lives,” said Nias.

A New Horizon of Human-Centered Research

While currently focused on naval missions, the Institute’s researchers see potential applications in a wide range of fields. The tool could one day be used in any situation where decisions need to be made quickly, including medical emergencies, evacuations, and disaster responses. Additionally, Aryal sees potential for his image augmentation research in any field where data is scarce, naming everything from astronomy to satellite image analysis. Williams, meanwhile, envisions a version of her lab’s simulation tests that can gauge the effectiveness of AI educational tools, an important area of research as AI technology increasingly enters classrooms.

Under Washington’s leadership, HCAI stands as a declaration of Howard’s position as a leader in tech, ensuring that the next generation of computer scientists remains at the cutting edge of AI research without forgetting the human element.

“Howard has always been leading the way in the creation of STEM professionals. Within the Institute, the researchers are not only creating software innovations, but they are also leading the way in showing how the nation’s workforce can come to Howard to learn advanced skills in artificial intelligence,” Washington said. “We’re studying how our unique way of mentoring and teaching young scientists is creating a new workforce for these future technical jobs.”